This blog post was originally published at Cadence's website. It is reprinted here with the permission of Cadence.

Jeff Bier heads up the Embedded Vision Alliance (and, in his day job, he heads up BDTi). His own presentation was titled 1000X in 3 Years: How Embedded Vision Is Transforming from Exotic to Everyday. His main point is that we are living in a time of unprecedented progress and the cost and power consumption of vision computing will decrease by about 1000X in the next three years.

As he put it, "the future's so bright you've gotta wear shades." Or maybe they are Bier goggles! Let's see where the numbers come from.

Deep Neural Networks

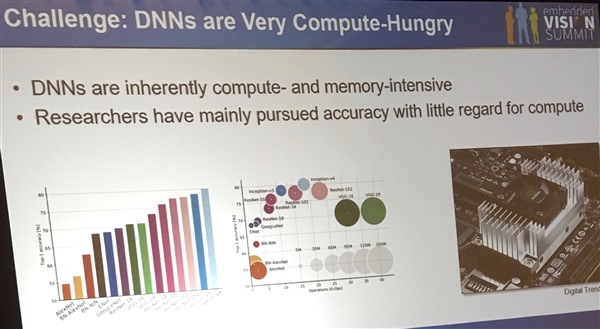

Deep Neural Networks (DNN) have progressed fast. Vision using traditional methods had incrementally improved for years, and then with DNN two things happened. There was a sudden big improvement, and the rate of improvement also increased. However, they are very compute and memory intensive since researchers have generally pursued accuracy with little regard for the amount of computation required. That was what academics get rewarded for. DNN are becoming the dominant approach for all types of challenging perception tasks, as opposed to before when different tasks were attacked with different approaches.

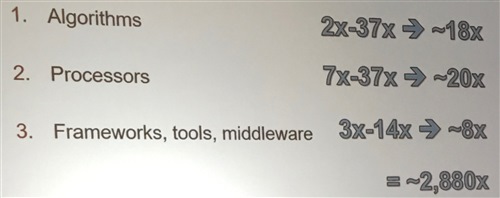

The focus has now switched to how to make these algorithms better, not just in terms of accuracy, but also efficacy of implementation. Jeff took care to emphasize that he's not relying on Moore's Law for his 1000X. He didn't want to get into Moore's Law being over or not, but said that for this presentation we could all agree that it was. The 1000X comes from three installments of 10X:

- 10X algorithm efficiency (not accuracy) improvement

- 10X by improvement of processors being used

- 10X by improvement of frameworks, tools, middleware

Improving Efficiency

The top labs have plenty of computing power but now product developers are focusing on deployment, not just publishing papers, and they are finding lots of ways to improve efficency.

Probably the biggest switch is from 32-bit floating point, which is typically used for training and for algorithm development, since NVIDIA GPUs are the engine of choice, and they use 32-bit floating point. But a huge increase in efficiency, with no to little decrease in accuracy, comes from switching to fixed point and then reducing the width to 16 or 8. Or even 4 in some cases.

In fact, during the summit, Cadence's Samer Hijazi had shown some outstanding results with an automated way to explore and find the optimal tradeoff between accuracy and implementation cost (primarily the number of MACs, which is almost the same as power).

Improvement of Processors

When you have a demanding and parallelizable workload, the move from general-purpose to specialized architectures makes improvements of 10X easy. You can get 100-1000X just there at the cost of big penalties in programmability, meaning not the difficulty in programming (although that is an issue too) but the flexibility to implement slightly different algorithms if circumstances change.

As an example of what can be done, Jeff showed Fotonation who have 3M gates processing 30 frames/second at 30mW. To put that in perspective, that is the power of an LED. So they can process video for less power than the red light that shows recording is taking place.

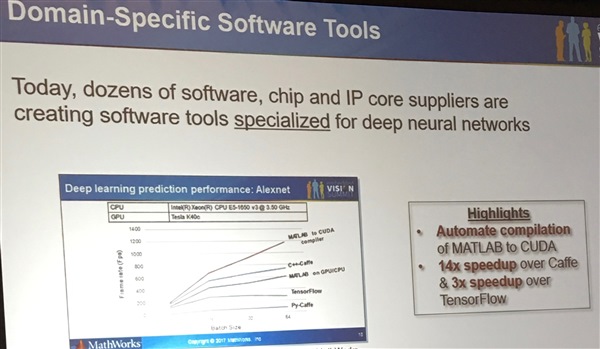

Software Between the Processor and the Algorithm

The algorithm is the top slice of bread, and the processor is the bottom slice. In between is the filling. You can just use software that is completely oriented to NN and optimize out a lot versus general-purpose compilation. If you get domain specific, you can get 3X-14X compared to open-source frameworks such as Caffe and Tensorflow.

The Grand Total

Multiplying it all out, algorithms 2-37 gives 18. Processors 2-37 gives 20. Frameworks 3-14 gives 8. So multiplying them all together gives about 3000X, so Jeff says his claim for 1000X is pretty conservative.

If the algorithms are really stable and you go all the way to custom hardware for a specific application, then there is another factor of about 100X to be had versus a processor-based solution.

Jeff gets to see a lot of new applications in development. He expects many new devices that are inexpensive, battery power, and always on. It's not just compute since low-powered image sensors are also required, but there is exciting stuff happening there, too.

Another way to cut up 1000X in three years is 10X per year. So we should already see an improvement of 10X by Embedded Vision Summit 2018. Which, I'm sure Jeff would want me to tell you, is May 22 to 24, 2018 at the Santa Clara Convention Center. Save the date and I'll see you there.

By Paul McLellan

Editor of Breakfast Bytes, Cadence

The Grand Total

Multiplying it all out, algorithms 2-37 gives 18. Processors 2-37 gives 20. Frameworks 3-14 gives 8. So multiplying them all together gives about 3000X, so Jeff says his claim for 1000X is pretty conservative.

Jeff Bier heads up the Embedded Vision Alliance (and, in his day job, he heads up BDTi). His own presentation was titled 1000X in 3 Years: How Embedded Vision Is Transforming from Exotic to Everyday. His main point is that we are living in a time of unprecedented progress and the cost and power consumption of vision computing will decrease by about 1000X in the next three years.

As he put it, "the future's so bright you've gotta wear shades." Or maybe they are Bier goggles! Let's see where the numbers come from.