Every year we see amazing technology at CES, including innovations in automotive, smart robots, drones, AR/VR, smart home appliances and many other areas. One of the things that’s exciting to follow is the advancement from expensive, futuristic gadgets to useful, practical devices. This year showed huge progress in that direction, but also included a fair share of over-the-top, just-for-show gadgets. Here is a look at the technology enabling artificial intelligence and computer vision consumer devices to enter the mainstream in the coming years.

Robots and virtual assistants can do much more with camera eyes and built-in AI smarts

Voice interfaces have been huge in the past few years, ever since Amazon’s Echo products were first launched in 2014. This year, it became clear that in order to get to the next level, these devices must adopt vision and AI technologies at the edge. The show included more camera-enabled robots than we can count, but here are a few examples that stood out.

Omron's Forpheus uses AI to play a mean game of ping pong (Image: CEVA)

The robotics company Omron demonstrated its technology in a fun and bouncy way, with a robotic master of ping pong called Forpheus. The robot uses two cameras to track the ball’s position and velocity and applies a proprietary prediction model to calculate the trajectory and keep up a rally with its human opponent. An additional camera tracks the human player’s facial expressions to determine whether or not they are enjoying the game. While this is not meant to be a commercial product, it shows technology that can be applied to a variety of industrial and consumer functions using AI, sensing and advanced robotics.
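Omron's prediction model is proprietary, so the following is only a minimal sketch of the general idea, not Forpheus' actual method. It assumes the cameras already deliver timestamped 3D ball positions, and it models the flight with gravity alone, ignoring air drag, spin and the bounce on the table. The names `Sample`, `estimate_velocity` and `predict_position` are hypothetical and chosen for illustration.

```python
from dataclasses import dataclass

G = 9.81  # gravitational acceleration in m/s^2, acting along -z (assumption)


@dataclass
class Sample:
    """One tracked ball position (hypothetical format): time in s, position in m."""
    t: float
    x: float
    y: float
    z: float  # height


def estimate_velocity(a: Sample, b: Sample) -> tuple[float, float, float]:
    """Estimate the ball's velocity at the time of sample b from two samples.

    Horizontal velocity is a plain finite difference. Under constant gravity
    the finite difference in z is the velocity at the midpoint of the
    interval, so it is shifted by -G * dt / 2 to get the velocity at b.
    """
    dt = b.t - a.t
    if dt <= 0:
        raise ValueError("samples must be in increasing time order")
    vx = (b.x - a.x) / dt
    vy = (b.y - a.y) / dt
    vz = (b.z - a.z) / dt - G * dt / 2.0
    return vx, vy, vz


def predict_position(b: Sample, velocity: tuple[float, float, float],
                     horizon: float) -> tuple[float, float, float]:
    """Predict where the ball will be `horizon` seconds after sample b."""
    vx, vy, vz = velocity
    x = b.x + vx * horizon
    y = b.y + vy * horizon
    z = b.z + vz * horizon - 0.5 * G * horizon ** 2
    return x, y, z
```

A real system would refine the estimate over many frames and account for the effects this sketch leaves out, but the structure is the same: measure position, estimate velocity, and extrapolate to the point where the paddle has to be.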

Not all the demos were as smooth as Forpheus’ table tennis skills. LG’s unveiling of CLOi, the company’s smart home robot, included a few awkward moments in which the robot did not respond to voice commands. The similar-looking Jibo showed its social skills, which include facial recognition. The device, which has been on sale since October, takes a different approach than the leading smart speakers by being more socially oriented and interacting on a personal level with users. SLAMtec also showed robots that showcased its Slamware localization and navigation solution, as well as Zeus, its general-purpose robot platform. UbTech Robotics, the company that last year unveiled the Lynx, an Alexa-powered humanoid robot, this year revealed a biped robot that can traverse stairs and play soccer.

From left to right: SLAMtec, Jibo and Lynx vision-based robots (Images: CEVA)

Aibo, Sony’s robotic pet dog from the late ’90s, has been revived in a new, far more technologically advanced version. It includes two cameras and multiple sensors so that it can recognize people and react to touch and voice.

In other pet-related innovation, Petcube showed an interactive Wi-Fi pet camera, which enables users to check in on their pets remotely. One of their pet camera models even lets you throw a treat to your pet by swiping your finger.

When will VR take off?

As for innovation in the VR market, we see it growing steadily but still not exploding as some may have expected. This is mainly due to difficult challenges such as limited computational resources, power consumption, inside-out tracking and limited content quality.

During CES 2018, HTC announced the HTC Vive Pro, which offers high resolution and low latency. More important, though, is the ability to stream content directly to the headset without cables, unlike most other devices. It looks bigger than the HTC Vive and, given its high price tag, it mainly targets high-end professional users.

Wireless Head Mounted Display (HMD) by HTC Vive Pro (Image: HTC)

A new use of VR technology that might find mainstream consumer appeal is Google’s VR180. This innovative way of capturing images uses stereo camera technology to capture a 3D image, but instead of 360 degrees, which isn’t very convenient for normal viewing situations, it captures 180 degrees. The Lenovo Mirage Camera and Yi Horizon VR180 Camera are two new devices dedicated to snapping in this new format. The captured images can be viewed in 3D with the Google Daydream VR HMD, or in 2D on any screen.

Autonomous Vehicles Steal the Spotlight

For the last few years, autonomous vehicles have been one of the biggest attractions at CES. This year, it seems that automotive specialists already take self-driving cars as a given, and are now looking to the services and applications that will become necessary once humans no longer need to drive. Ford’s CEO, Jim Hackett, for example, described an entire ecosystem powered by autonomous vehicles called “the living street” in his keynote speech. Toyota’s e-Palette concept car carried a similar message, depicting the versatility and modularity that vehicles without drivers will have, with configurations ranging from mobile casinos and restaurants to ride-sharing services and cargo transport.

In autonomous aviation, Bell Helicopter showed their concept of how autonomous flying will take place as a seamless and connected journey in a taxi-cab-like electric helicopter. Visitors to the Bell booth could try out the concept via a VR headset.

These examples show that it’s clear to everybody that the autonomous vehicle revolution is happening. The only question is what our cities will look like once it is completed.

Bell’s Air Taxi gives a glimpse of self-flying helicopters (Image: CEVA)

Intelligence is moving to the Edge

The explosion in artificial intelligence in the last few years is a direct result of the internet. In the past, personal computers and handheld devices weren’t powerful enough for deep learning, so big companies, like Google and Amazon, used huge server centers to process data in the cloud. The advantage of this approach is that it provides practically endless computing power, no matter what processor a specific device has. But the disadvantages are many. First, there is the latency of the data transfer, which can change depending on network coverage, not to mention situations where there is no coverage at all. More importantly, privacy and security are weak points of cloud processing. When dealing with sensitive information, it’s always better to keep it on the device and not send it out where it may be vulnerable.

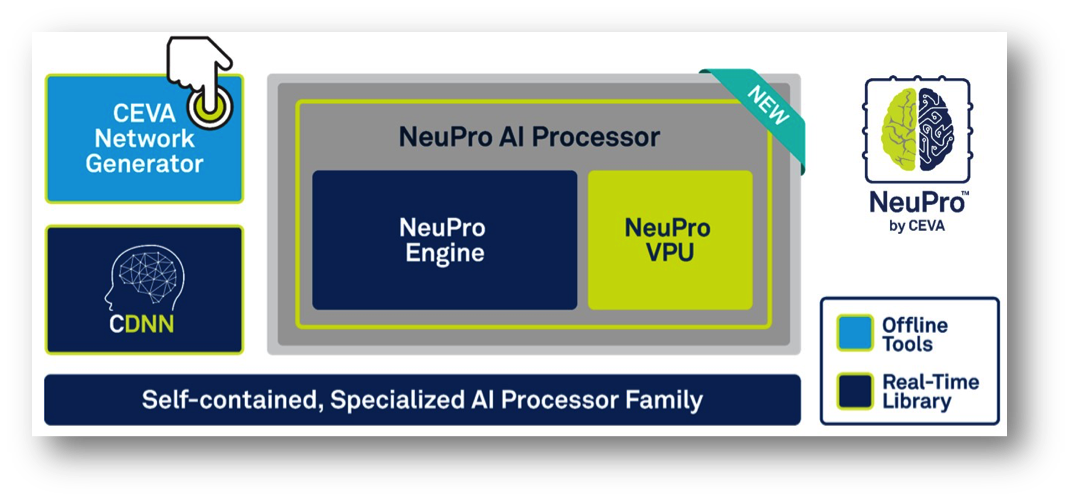

These reasons make it clear that using the cloud for deep learning is only a temporary solution. As soon as embedded platforms can deliver the necessary performance to enable AI, it will be performed on the edge device. You might be wondering when embedded platforms will be powerful enough to do this. The answer is they already are. New flagship phones, like the iPhone X, have embedded neural engines capable of recognizing the user’s face to unlock the phone without sending the information to the cloud. Many other AI features are also available on the device, thanks to powerful and efficient DSPs and dedicated deep learning engines based on vector processors. Advanced processes and power-saving techniques enable these systems to consume much less power than GPUs and other processors used in remote servers, so even small, battery-powered devices can use AI processors such as NeuPro without relying on the cloud.

NeuPro is a family of self-contained, specialized AI processors for embedded devices (Image: CEVA)

Learn More

Complemented by a suite of software and hardware tools, the NeuPro AI family of processors enables embedded intelligence with a streamlined development cycle. Learn more at NeuPro Family of AI Processors for Deep Learning at the Edge.

By Liran Bar

Director of Product Marketing, Imaging & Vision DSP Core Product Line, CEVA