Demand for computer vision has never been stronger. Propelled by advances in deep learning, a staggering number of devices and applications utilizing vision and artificial intelligence (AI) have hit the market. While employing vision and deep learning in embedded systems poses a challenge, it is becoming a requirement. Here is a look at the variety of markets and use cases in which embedded intelligent vision has become a must-have component.

Markets that require vision and imaging on embedded systems (Image: CEVA)

Mobile Devices Use Multiple Cameras and Sophisticated Imaging Techniques

It might be hard to imagine, but about a decade ago the camera in a phone was a basic, low-resolution extra. Today most phones have at least two cameras: one on the back for regular shots and one on the front for selfies and video calls. There is also a strong trend toward dual rear cameras, as in the newly unveiled Asus ZenFone 3 Zoom, which deliver DSLR-like features such as optical zoom, bokeh and refocus. Image quality on mobile devices is also approaching that of DSLR cameras, thanks to enhancement techniques like video stabilization, low-light enhancement, super resolution and HDR. A good example is the new ASUS SuperPixel™ technology. More recently, smartphone and tablet cameras have also begun to support augmented and virtual reality (AR/VR) and 3D modeling. Because these features are major differentiators in the mobile market, vendors are locked in a constant race to deliver new features and significant performance improvements while consuming less power to extend battery life.

From Airports to Residences: Video Surveillance Is Crucial

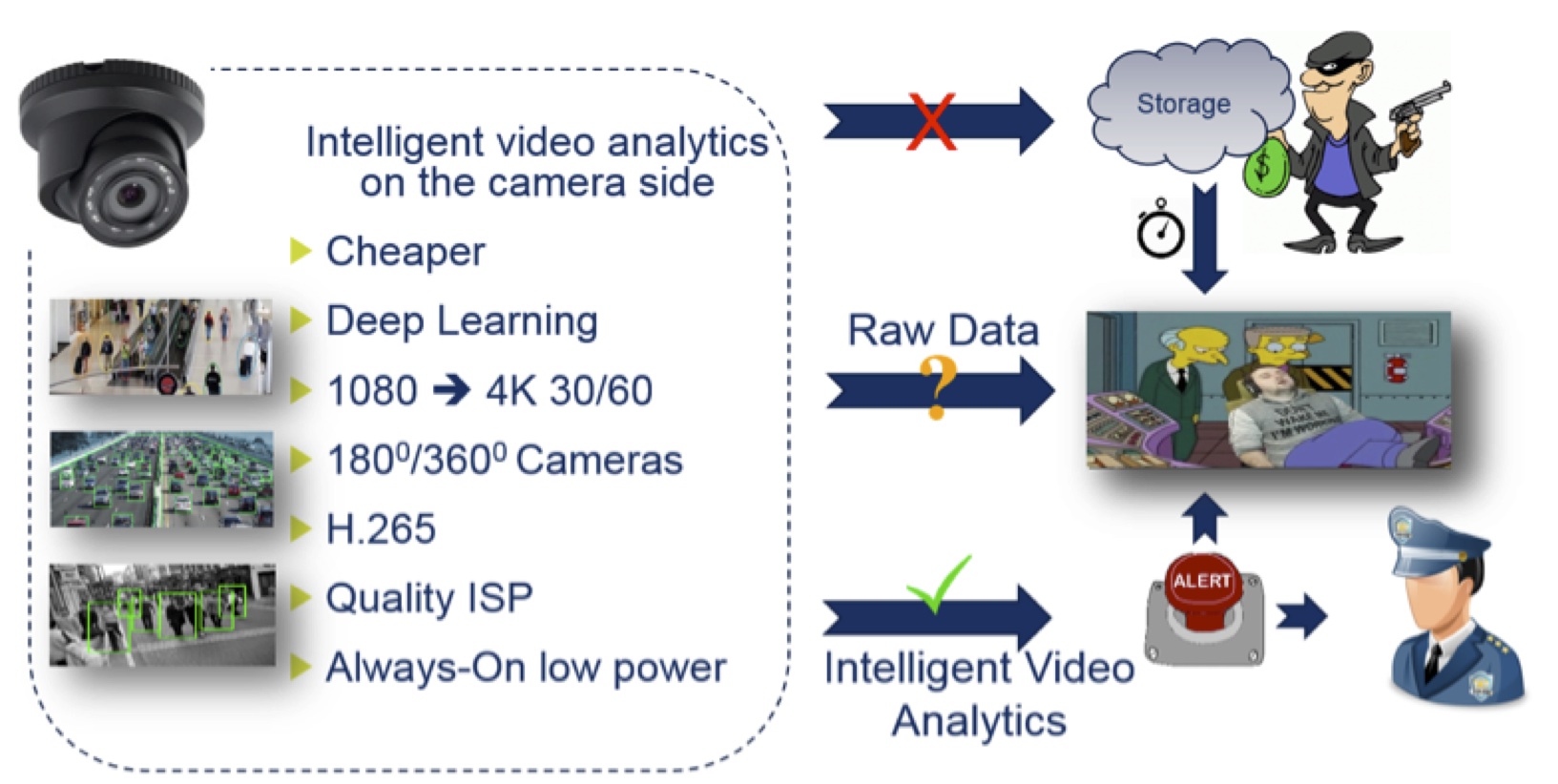

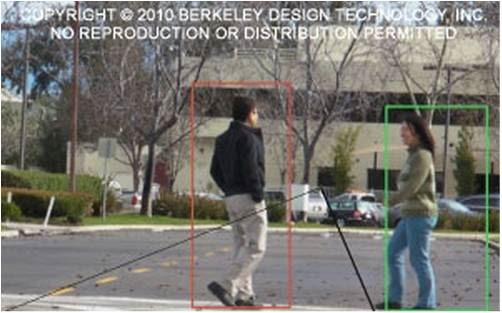

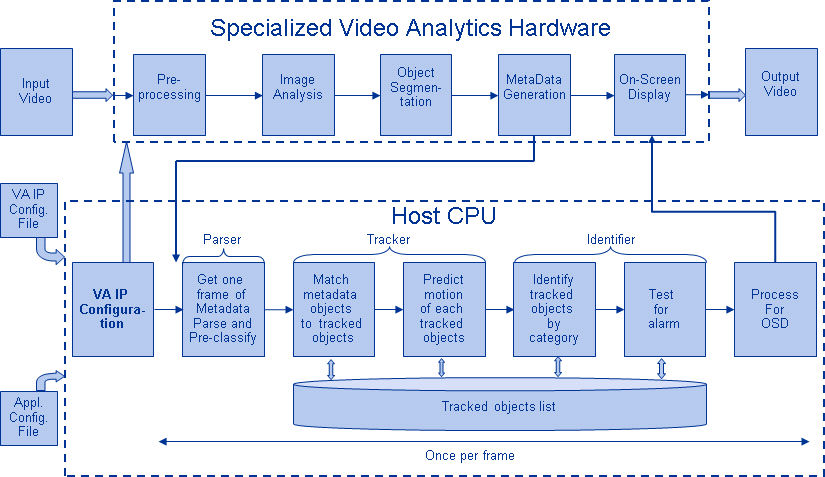

Intelligent video analytics are revolutionizing the security industry. Large facilities like airport terminals have always been at the forefront of surveillance, but surveillance is now becoming cheaper and far more accessible to small residences as well. In security applications, real-time analysis of complex scenes is critical.

Some systems send video to cloud services for analysis, but many are better served by analyzing the stream on the device itself, an approach known as edge analytics or edge processing. Sending everything to the cloud delays the response, exposes sensitive data, and increases network traffic; avoiding these costs is why edge processing is so important for video analytics.
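To make the data-traffic argument concrete, here is a back-of-the-envelope sketch. It assumes, purely for illustration, that a camera streaming to the cloud sends compressed 1080p video at 4 Mbit/s, while an edge-analytics camera uploads only event metadata, say one 200-byte record per second. Both figures are hypothetical; real bit rates and event rates vary widely by codec, scene and application.

```python
def daily_bytes(bits_per_second: float) -> float:
    """Convert a sustained bit rate into bytes transferred per day."""
    seconds_per_day = 24 * 60 * 60
    return bits_per_second * seconds_per_day / 8

# Assumption (hypothetical): one camera streams compressed 1080p video to the cloud at 4 Mbit/s.
cloud_stream_bps = 4_000_000

# Assumption (hypothetical): an edge-analytics camera uploads only event metadata,
# one 200-byte event record per second.
edge_event_bps = 200 * 8 * 1

cloud_gb = daily_bytes(cloud_stream_bps) / 1e9
edge_gb = daily_bytes(edge_event_bps) / 1e9
ratio = cloud_stream_bps / edge_event_bps

print(f"cloud: {cloud_gb:.1f} GB/day, edge: {edge_gb:.3f} GB/day, ratio: {ratio:.0f}x")
# prints "cloud: 43.2 GB/day, edge: 0.017 GB/day, ratio: 2500x"
```

Under these assumptions a single camera would push about 43 GB per day to the cloud, versus tens of megabytes when the analysis runs on the device. Multiplied across many cameras, that gap is what drives network load.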

For battery-powered equipment, one of the biggest challenges is staying within a tight power budget. That’s why a low-power, high-performance embedded system is crucial for this market.

Surveillance Market – Key Imaging Trends (Image: CEVA)

Automotive ADAS Vision Applications

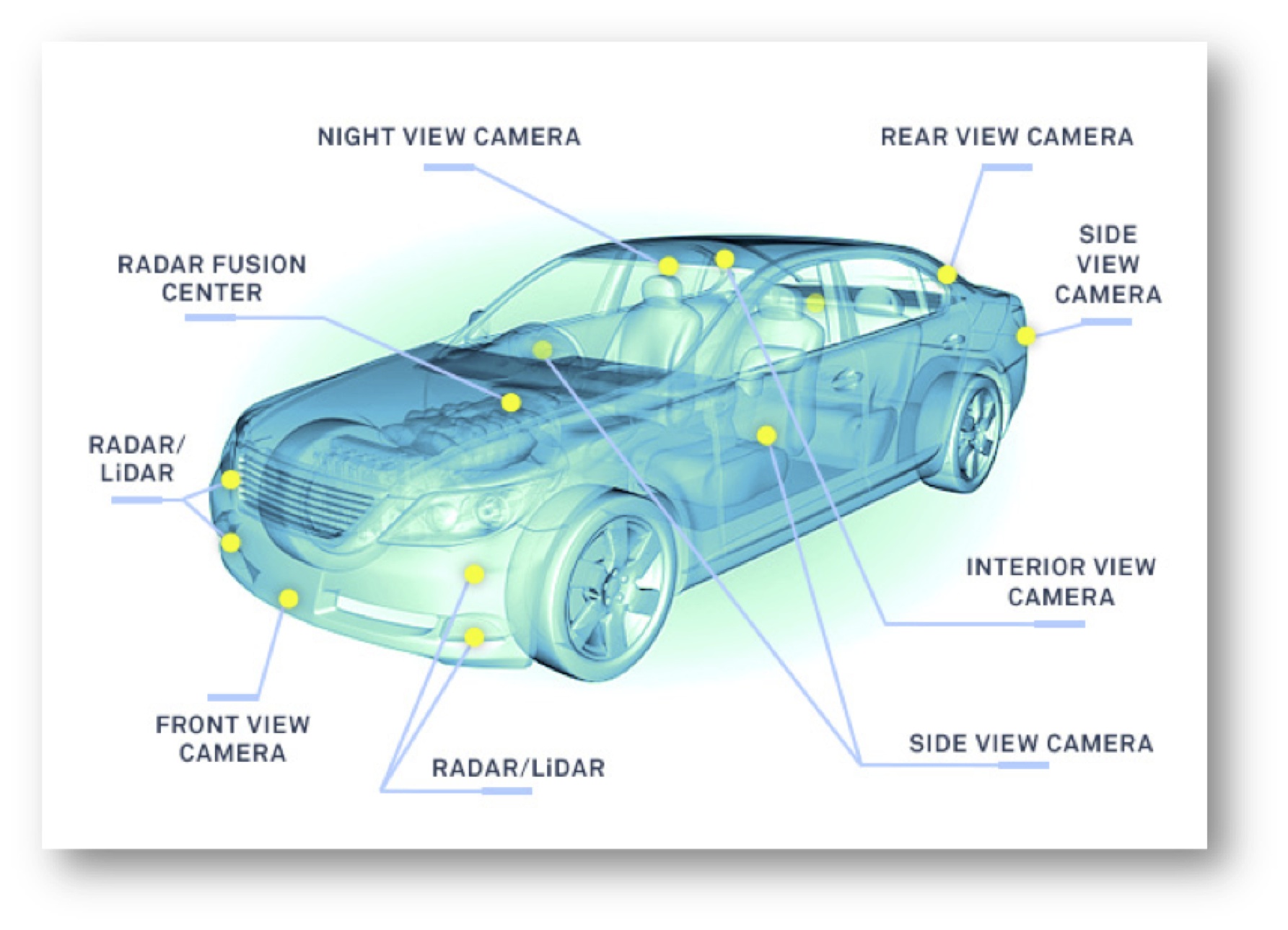

Autonomous cars are already on the road in many cities for experiments and trial runs. Until fully autonomous technology matures, however, the roads will be populated mostly by highly automated vehicles (HAVs) that rely on advanced driver assistance system (ADAS) functionality. These functions combine computer vision algorithms, such as image distortion correction and image stitching, with compute-intensive deep learning to ensure safety and efficiency.

Here, too, there are many advantages to performing the processing in the camera itself (edge analytics) rather than on a central supercomputer. For a good rundown of the pros and cons of each approach, read this post about choosing the best vision system for automated vehicles.

Automotive ADAS Vision Applications (Image: CEVA)

Key ADAS trends include:

- Flexible solutions that can adapt to OEMs’ fast-evolving programs

- A major focus on the power/performance ratio and on size reduction

- Strong software enablement to protect the return on investment

Drones Are Getting More Autonomous and Much Smarter

As drones become ubiquitous, they are also becoming more autonomous and less reliant on a human operator. Drones now incorporate smart sensors and processing systems that extend their computer vision and machine learning capabilities. Two of the most sought-after features are “Follow me” and “Point of interest”, which let the drone automatically “lock on” to a subject and track it. To perform these functions flawlessly and without human intervention, drones need sense-and-avoid and navigation capabilities. If any of these functions fails, the consequences can be serious, such as crashing into an obstacle that was not detected and avoided. This demands high-quality vision processing performed in real time on the drone itself.

Video of a drone crashing into power lines that its obstacle avoidance failed to detect (Source: YouTube)

In addition, demand for higher-resolution video keeps growing. HD video is now taken for granted, and 4K (UHD) is becoming common rather than being reserved for high-end devices. At the same time, users want longer flight times, so all of this processing must be highly power-efficient. In such a diverse market, the solution must also be flexible enough to add new features after deployment as customer demand and market dynamics change.
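Why does the move from HD to 4K put so much pressure on efficiency? A quick sketch of the pixel throughput a vision pipeline has to handle makes it visible. It assumes both streams run at 30 frames per second and that per-pixel work (for example distortion correction or stitching) costs roughly the same at either resolution; both are simplifying assumptions for illustration.

```python
def pixel_rate(width: int, height: int, fps: int) -> int:
    """Pixels per second that a vision pipeline must process."""
    return width * height * fps

# Assumption: both streams at 30 fps.
hd = pixel_rate(1920, 1080, 30)    # 1080p HD
uhd = pixel_rate(3840, 2160, 30)   # 4K UHD

print(f"HD:  {hd / 1e6:.1f} Mpixel/s")
print(f"UHD: {uhd / 1e6:.1f} Mpixel/s ({uhd // hd}x HD)")
# prints "HD:  62.2 Mpixel/s"
# prints "UHD: 248.8 Mpixel/s (4x HD)"
```

At the same frame rate, 4K UHD carries four times as many pixels as 1080p, so under the constant-cost assumption per-pixel processing grows about fourfold, while the battery, and therefore the flight-time budget, stays the same.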

Vision Is Becoming Pervasive in IoT

As evidenced by CES 2017, the IoT market is red hot. The smart home is no longer just a trend, but a real market segment with many pragmatic and useful products. Far-field voice-activated virtual assistants, like Amazon Echo and Google Home, were omnipresent at CES. There were also many smart home devices, such as camera-equipped smart LED light bulbs and 360-degree cameras, that use vision technologies like face recognition, distortion correction and image stitching. The next step will be integrating voice-activated assistants with vision technology to perfect the smart home experience.

360-Degree Camera Devices (Image: CEVA)

Augmented and Virtual Reality Are Continuing to Grow

Also prominent at CES were practical use cases that AR/VR has found beyond gaming, which appeals to only a relatively small market segment. One example with wider appeal is using VR to attend a live sports event. Combining 360-degree video with directional audio, relayed in real time over high-speed 5G networks, creates a fully immersive experience, as if you were there. Last year’s Pokémon Go craze made it clear that millions of potential users are just waiting for opportunities to use this technology. Companies that find the right use cases and package them well stand to profit handsomely in this field.

Learn more

Here at CEVA, we take our position as the industry leader in programmable IP imaging and vision engines very seriously. That’s why we offer not only the top vision processing IP, but also the best frameworks to streamline the development of a comprehensive embedded solution. This includes a scalable architecture with a toolkit providing push-button porting of neural networks, real-time software libraries, hardware accelerators and much more. All of these are designed to ensure a smooth transition to an embedded environment and to minimize time to market for our customers.

To find out more about CEVA’s ultimate deep learning and artificial intelligence embedded platform, click here.

For more details on how to build your own embedded intelligent vision solution, check out my post about Generating an Embedded Neural Network at The Push of a Button. You can also tune in to our on-demand webinar on Deep Learning on Embedded Systems.

By Liran Bar

Director of Product Marketing, Imaging & Vision, CEVA.