This blog post was originally published at CEVA's website. It is reprinted here with the permission of CEVA.

Immediately following the announcement of the iPhone 7 Plus, Apple’s first dual camera phone, commentators (like this post from Wired and this post from EETimes) declared that dual cameras are the new norm for smartphone photography. That’s how much sway Apple has. As I speculated in my August 2015 post, this feature had been anticipated ever since Apple acquired a company specializing in multi-camera technology. Apple was not the first (HTC, LG and Huawei, for example, have had dual camera phones out for some time now), but it is the bellwether in this domain, and its adoption of a feature marks the difference between a nice-to-have and a mainstream standard.

Two Lenses are Better than One

The full potential of dual cameras is yet to be unleashed

While it’s probably true that other OEMs will follow suit and offer dual cameras in future models, the transition is far from trivial. Throwing an additional lens and sensor into the device is the easy part. The real challenge is delivering the full spectrum of features that dual cameras can provide, finding the processing power for them on the phone, and doing so without quickly draining the battery. Apple, for example, says it improved the image signal processor, but supplies no further details on what, if anything, was added in hardware to support these features.

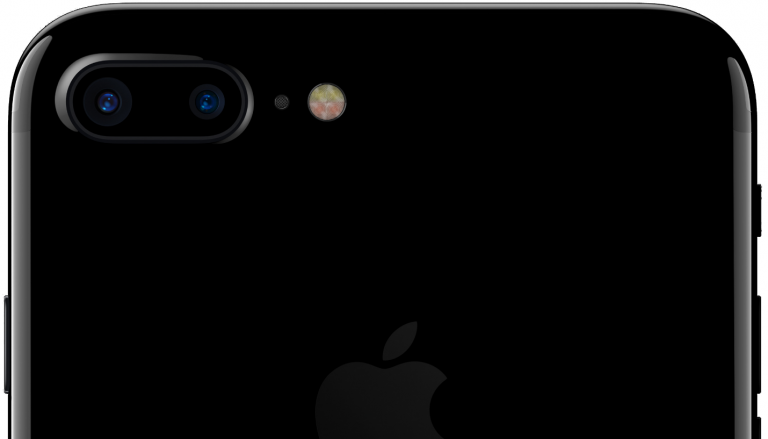

Apple iPhone 7 Plus featuring dual camera (Source: Apple)

The potential benefits of the dual camera are many. It can achieve much better zoom than the digital zoom we’re used to, produce photographic effects similar to those of digital single-lens reflex (DSLR) cameras, and generate a depth map, which could then be used for higher quality object recognition or for mapping the environment around the device (using Simultaneous Localization And Mapping, or SLAM), among many other tricks. You can read about some of these cool features in the slew of articles published this week, like this one.
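To make the depth map idea a little more concrete: in the textbook case of two parallel, rectified cameras, the distance to a point follows from how far that point shifts between the two images (its disparity), as depth = focal length × baseline / disparity. The snippet below is a minimal sketch of that relationship only. The function name and all numeric values are illustrative assumptions, not iPhone 7 Plus specifications, and a real pipeline also needs calibration, rectification and stereo matching (all the more so when the two lenses have different focal lengths).

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth in meters of a point seen by two parallel, rectified cameras.

    disparity_px: horizontal shift of the point between the two images, in pixels
    focal_px:     focal length expressed in pixels (assumed equal for both cameras)
    baseline_m:   distance between the two camera centers, in meters
    """
    if disparity_px <= 0:
        # Zero disparity means the point is effectively at infinity
        return float("inf")
    return focal_px * baseline_m / disparity_px


# Hypothetical values, chosen only for illustration
FOCAL_PX = 3000.0
BASELINE_M = 0.01  # 1 cm between the two lenses

for d in (60.0, 30.0, 15.0):
    z = depth_from_disparity(d, FOCAL_PX, BASELINE_M)
    print(f"disparity {d:5.1f} px -> depth {z:.2f} m")
```

Halving the disparity doubles the estimated depth, so with lenses only about a centimeter apart, distant objects shift by very few pixels. That is one reason depth estimation on a phone demands careful, and computationally heavy, processing.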

Of all these possibilities, the new Apple iPhone 7 Plus offers essentially only two: enhanced zoom, and a depth-of-field (bokeh) effect that keeps the subject of the photograph sharp and in focus while the rest of the scene is blurred. With the small lenses and sensors in smartphones, this is practically impossible to achieve with a single camera. The iPhone 7 web page boasts that, by combining the dual camera with advanced machine learning, the iPhone 7 Plus will make this effect easy to create. The interesting thing about this exciting feature, though, is that Apple lists it as “coming soon”: it will only be available in the next software update. In addition, the effect will only be available for portraits, as the algorithms are based on Apple’s facial recognition software. This emphasizes how difficult it really is to deliver this effect and other machine learning based software features.

The bokeh effect will be available on the iPhone 7 Plus in the next software update, and will apply only to portraits
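Apple has not published how its effect works, so the following is only a toy sketch of the general idea of depth-based background blur: pixels that the depth map places near the camera stay sharp, and everything farther away is replaced by a blurred version. It works on a single row of grayscale values to stay short. The function names, threshold and pixel data are hypothetical, and production pipelines use far more sophisticated segmentation and blur kernels.

```python
def box_blur_row(row, radius):
    """Blur a row of grayscale values with a simple moving average."""
    blurred = []
    for i in range(len(row)):
        lo = max(0, i - radius)
        hi = min(len(row), i + radius + 1)
        window = row[lo:hi]
        blurred.append(sum(window) / len(window))
    return blurred


def synthetic_bokeh_row(row, depth_row, subject_max_depth_m, radius=1):
    """Keep pixels at or nearer than the threshold sharp; blur the rest."""
    blurred = box_blur_row(row, radius)
    return [
        sharp if depth <= subject_max_depth_m else soft
        for sharp, soft, depth in zip(row, blurred, depth_row)
    ]


# Hypothetical one-row image: a subject about 1 m away, background about 5 m away
pixels = [10, 200, 10, 200, 120, 130, 125, 10, 200, 10]
depths = [5.0, 5.0, 5.0, 5.0, 1.0, 1.0, 1.0, 5.0, 5.0, 5.0]

result = synthetic_bokeh_row(pixels, depths, subject_max_depth_m=2.0)
print(result)
```

Even this toy version needs a per-pixel depth estimate plus a filtering pass over the image. Doing the same at full resolution, with clean edges around hair and faces, is where the processing cost comes from.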

One of the questions that I find most intriguing is why this feature is only included in the iPhone 7 Plus, and not in the iPhone 7. Is there more to it than justifying the higher price of the Plus version? This is not the first time that the Plus version has had a slightly better photo setup. The iPhone 6 Plus included optical image stabilization (OIS), which was not included in the smaller iPhone 6. My guess is that these differences exist because the larger form factor allows for a larger battery, which in turn enables heavier processing. The mechanical OIS in the 6 Plus and the computational effort behind the dual camera in the 7 Plus are both heavy power consumers. Enjoying these features while still leaving enough power for the rest of the phone requires either extremely efficient processing or extra battery capacity. If my guess is right, this is a further indication of how important an efficient processor is to unleashing the potential of dual cameras, and to implementing them in smaller smartphones as well as large ones.

The next step: dual cameras enabling advances in augmented reality

Recently, I shared a post about Pokémon Go and breaking the barrier of bringing augmented reality (AR) to the mass market. I maintained that integrating three technologies (depth perception, SLAM, and deep learning based object recognition) into future devices would be key to pushing AR forward to advanced uses like Google Tango. Dual cameras fit perfectly into this scenario, as they enhance the ability to perform all three. For AR to penetrate the mass market, dual cameras must truly become the norm, as many commentators forecast. But the physical camera is not enough. The software behind the lens is the real star of the show, and the enabler of the vast range of possible features and applications. Getting the most out of dual cameras will require low-power, high-performance dedicated engines. Making these vision engines programmable as well will let vendors cope with the rapid changes in the domain, and will allow deep learning, imaging and intelligent vision algorithms to run concurrently.

Find out more

The award-winning CEVA-XM4 is an excellent candidate for unleashing the potential of dual cameras. To learn more about this intelligent vision processor, click here.

To learn more about CDNN, the real-time software framework for deep learning on the CEVA-XM4, click here.

Yair Siegel

Director of Product Marketing, Multimedia, CEVA